Imagine you’re a chef in a bustling kitchen, juggling ingredients, recipes, and a ticking clock. Now, picture a trusty assistant who not only keeps track of every spice and step but also shows you exactly how much you’ve used and what’s left. That’s the kind of help the latest update to Claude Code, version 1.0.86, brings to developers with its new /context command. Released by Anthropic, this feature is stirring excitement in the coding community by offering a clearer view of how AI-powered tools manage project context. It’s like getting a peek behind the curtain of a complex performance, and developers are buzzing about its potential.

What’s the /context Command All About?

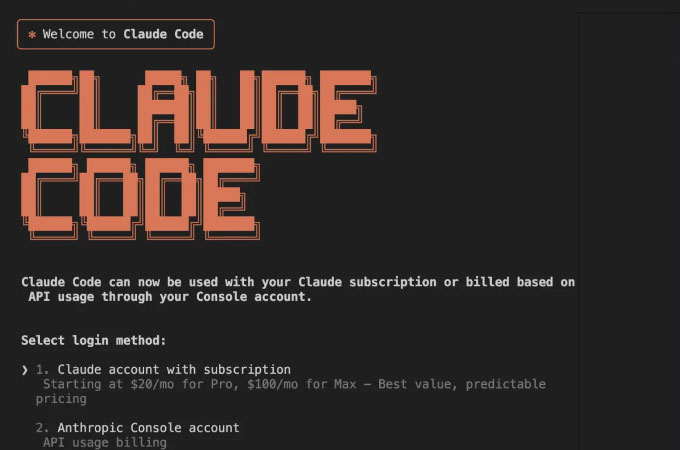

Claude Code, for those new to the scene, is an AI-driven coding assistant that lives in your terminal, designed to understand your entire codebase and execute tasks through natural language commands. Think of it as a coding partner who can read your project’s blueprint, write code, debug issues, and even handle Git workflows—all without you breaking a sweat. The newly introduced /context command in version 1.0.86 takes this a step further by letting developers visualize their “context window”—the chunk of data the AI uses to understand and respond to your instructions.

This context window is critical. It’s like the short-term memory of the AI, holding details about your project, recent commands, and files like the CLAUDE.md memory file, which stores project-specific notes. The /context command now displays how much of this memory is being used, including the share taken up by tools connected through the Model Context Protocol (MCP), the open standard that links Claude to external tools and data sources. This transparency is a big deal: it’s like having a dashboard that shows you exactly how much fuel your coding engine is burning and where it’s going.
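To make the dashboard idea concrete, here is a minimal Python sketch of the arithmetic behind a context breakdown. It is not the real /context output or implementation: the category names, the token counts, and the 200,000-token window size are all illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class ContextItem:
    """One contributor to the context window (hypothetical category)."""
    name: str
    tokens: int


def usage_report(items, window_size):
    """Return (name, tokens, percent_of_window) rows, ending with the free space."""
    used = sum(item.tokens for item in items)
    if used > window_size:
        raise ValueError("items exceed the window size")
    rows = [(item.name, item.tokens, 100 * item.tokens / window_size) for item in items]
    free = window_size - used
    rows.append(("free space", free, 100 * free / window_size))
    return rows


# Illustrative numbers only; these are assumptions, not real /context output.
WINDOW_SIZE = 200_000
items = [
    ContextItem("system prompt", 3_000),
    ContextItem("MCP tools", 12_000),
    ContextItem("CLAUDE.md memory", 5_000),
    ContextItem("conversation", 60_000),
]

report = usage_report(items, WINDOW_SIZE)
for name, tokens, percent in report:
    print(f"{name:<18} {tokens:>8,} tokens  {percent:5.1f}%")
```

The point of the sketch is simply that every category competes for the same fixed budget, so a large slice in one place leaves less room for everything else.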

Why This Matters to Developers

For developers, context is everything. Without enough context, an AI tool like Claude might misinterpret your request, like a chef misreading a recipe and adding salt instead of sugar. Too much context, on the other hand, can clog the system, slowing it down or leading to irrelevant responses. The /context command helps strike that balance by showing developers what’s in Claude’s “mind” at any given moment. It’s not just about seeing numbers—it’s about understanding how Claude is prioritizing your project’s details.

Posts on X have highlighted the enthusiasm around this feature, with developers like @iannuttall noting that while the /context command isn’t yet as precise as the ccusage status bar (a tool for tracking token usage), it’s a step forward because it includes MCP tool activity and CLAUDE.md file usage. This makes it easier to spot when the AI is pulling in external data or referencing project memory, helping developers fine-tune their commands for better results.

A User Guide to the /context Command

Ready to give the /context command a spin? Here’s a quick guide to get you started:

Set Up Claude Code: If you haven’t already, install Claude Code 1.0.86 or later. You’ll need to sign in with an Anthropic account, using either an API key or a Claude subscription. The setup is straightforward; check Anthropic’s official documentation for step-by-step instructions.

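Run the /context Command: In your terminal, navigate to your project directory and start Claude Code by running claude. Then type /context at the Claude Code prompt (it is a slash command inside the session, not a shell command). Claude will display a breakdown of your context window, showing how many tokens (the units of text the model reads) are being used and what’s contributing to them, like code files, MCP tool calls, or the CLAUDE.md file.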

Interpret the Output: The command will show you a snapshot of your context usage. For example, it might reveal that 60% of your context window is occupied by a large CLAUDE.md file. In that case, trim the file itself, because it is loaded again at the start of each session. If the conversation history is what’s filling the window, consider using the /clear command to reset it before starting a new task.

Optimize Your Workflow: Use the insights from /context to refine your prompts. If you notice the AI is pulling in irrelevant files, you can manually adjust the CLAUDE.md file or limit MCP tool access to keep things focused.

Check MCP and CLAUDE.md Usage: The command’s ability to show MCP tool and CLAUDE.md activity is a standout feature. If you’re integrating external data (like AWS configurations via MCP), this helps ensure Claude is accessing the right resources without overloading the context window.
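If you want a rough sense of how heavy your CLAUDE.md file is before you even open a session, the steps above can be approximated with a short Python sketch. Everything here is an assumption for illustration: the four-characters-per-token rule of thumb is not a real tokenizer, the 200,000-token window and the 10% threshold are placeholder values, and the helper names are hypothetical. Treat the figures /context reports as the better source.

```python
import math
from pathlib import Path

CHARS_PER_TOKEN = 4    # rough rule of thumb (assumption), not a real tokenizer
WINDOW_SIZE = 200_000  # assumed context window size in tokens


def estimate_tokens(text):
    """Very rough token estimate based on character count."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def memory_share(text, window_size=WINDOW_SIZE):
    """Estimated percentage of the context window that the text would occupy."""
    return 100 * estimate_tokens(text) / window_size


def advise(share_percent, threshold_percent=10.0):
    """Return 'trim' when the share exceeds the (arbitrary) threshold, else 'ok'."""
    return "trim" if share_percent > threshold_percent else "ok"


if __name__ == "__main__":
    memory_file = Path("CLAUDE.md")  # project memory file in the current directory
    if memory_file.exists():
        text = memory_file.read_text(encoding="utf-8")
        share = memory_share(text)
        print(f"~{estimate_tokens(text):,} tokens, ~{share:.1f}% of window: {advise(share)}")
    else:
        print("No CLAUDE.md found in this directory.")
```

A result of "trim" is only a hint to prune stale notes from the memory file; the threshold is a judgment call you can tune to your own projects.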

While the /context command isn’t perfect yet—some developers note it’s less accurate than the ccusage status bar—it’s a powerful tool for those who want to geek out on their AI’s inner workings. It’s like having a diagnostic tool for your car’s engine, letting you tweak performance on the fly.

The Bigger Picture: Why Context Visualization Is a Big Deal

The /context command isn’t just a shiny new feature; it’s part of a broader shift in how AI tools are transforming software development. According to Anthropic’s own reports, Claude Code has already slashed development time for tasks like adding support for new programming languages, sometimes reducing weeks of work to mere hours. For instance, a team at Thoughtworks used Claude Code to add language support to their CodeConcise tool in half a day—a task that typically takes two to four weeks.

But it’s not all smooth sailing. The same Thoughtworks experiment revealed that while Claude Code can be a time-saver, it’s not infallible. Initial tests passed, but deeper analysis showed issues like missing filesystem structures in the output. This underscores why tools like /context are vital—they help developers catch these hiccups early by showing exactly what the AI is working with.

For the average coder, this means less guesswork and more control. Imagine you’re debugging a complex TypeScript project, and Claude suggests a fix that seems off. By running /context, you can see if it’s pulling in outdated files or misinterpreting your project’s structure, letting you course-correct before wasting time on faulty code.

What’s Next for Claude Code?

The /context command is just one piece of Claude Code’s evolving puzzle. Anthropic has been rolling out features like subagents—specialized AI helpers for tasks like code reviews or test generation—and deeper integrations with tools like GitHub and VS Code. These updates signal a future where AI doesn’t just assist but becomes a seamless part of the coding process, like a co-pilot who’s always ready with the right map.

For now, developers are thrilled but cautious. The /context command’s ability to shed light on MCP tool and CLAUDE.md usage is a step toward making AI coding tools more transparent and reliable. As one X user put it, it’s “v useful” for understanding how Claude interacts with your project, even if it’s not yet perfect. With Anthropic actively seeking feedback (you can report bugs directly via the /bug command), the tool is poised to get even better.

A Final Word

The /context command in Claude Code 1.0.86 is like a flashlight in the often murky world of AI-assisted coding. It empowers developers to see what’s happening under the hood, making it easier to harness Claude’s power without getting lost in the details. Whether you’re a seasoned programmer or a hobbyist tinkering with your first project, this feature is a glimpse into a future where coding feels less like a solo slog and more like a collaboration with a super-smart sidekick.