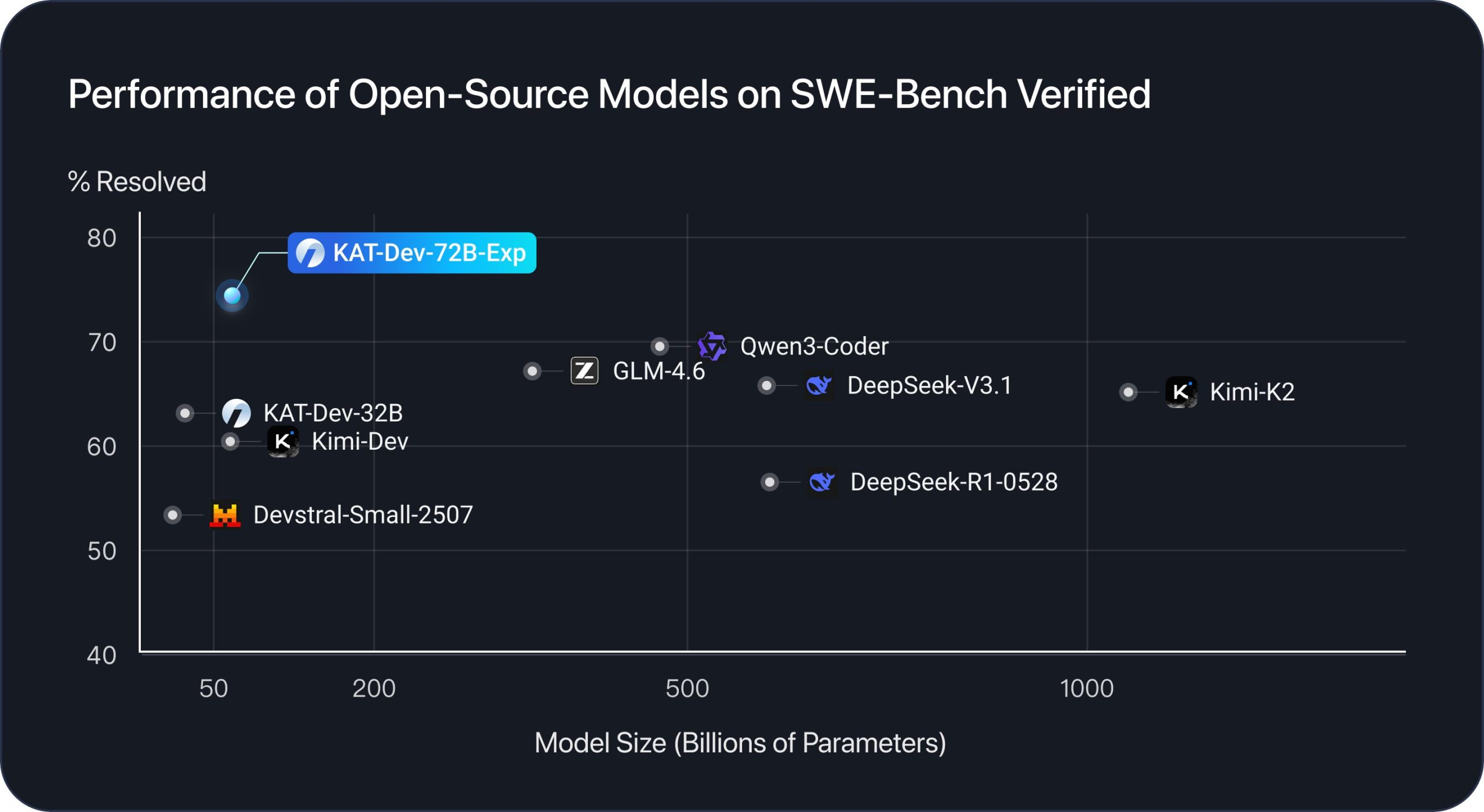

Ever stared at a tangled mess of code, wondering if an AI could swoop in like a digital detective and fix it faster than you can brew your morning coffee? Well, hold onto your keyboards, because KwaiAI—the Chinese tech giant behind the addictive short-video app Kwai— just unleashed KAT-Dev-72B-Exp, an open-source coding model that’s turning heads in the AI world. This 72-billion-parameter powerhouse isn’t just another chatbot; it’s a software engineering wizard that nailed a jaw-dropping 74.6% on the notoriously brutal SWE-Bench Verified benchmark, snagging the crown as the top open-source performer out there. And get this: It reportedly stacks up toe-to-toe with heavy hitters like Anthropic’s Claude 4.5 Sonnet, all while being totally free for tinkerers and pros alike. In a field where closed-door labs hoard the best toys, this feels like Christmas came early for developers everywhere.

Let’s break it down without the jargon overload. SWE-Bench is like the ultimate boot camp for AI coders: It throws real-world GitHub issues at models—think debugging ancient repos or patching security holes—and scores them on how cleanly they resolve the chaos. Most AIs fumble here because coding isn’t about spitting out snippets; it’s a chain of decisions, trial-and-error, and not derailing into nonsense. KAT-Dev-72B-Exp? It struts in with 74.6% accuracy when tested under the strict SWE-agent framework, blowing past rivals like Moonshot AI’s Kimi-Dev-72B (which hit 60.4%) and even edging out leaderboard staples like Claude 4 Sonnet’s 64.93%. That’s not hype; it’s the kind of leap that could mean fewer late-night debugging marathons for you or your team.

The secret sauce? KwaiAI’s crew didn’t just scale up parameters—they got clever with reinforcement learning (RL), that feedback-loop magic where AI learns from rewards like a kid acing a video game. But here’s where it shines: They overhauled the training engine to handle “shared prefix trajectories,” basically letting the model reuse smart starting paths across similar problems, slashing compute waste without skimping on smarts. Then there’s the entropy-shaping trick—a nifty tweak to the reward system that keeps the AI from getting stuck in ruts (aka “exploration collapse”). Imagine training a puppy: Without it, the pup might obsess over one toy and ignore the yard; with it, you nudge rewards to encourage sniffing around, boosting variety and long-term wins. This combo amps up efficiency, letting a massive 72B model train at scale without melting servers or budgets. It’s proof that in AI, brains beat brute force—echoing how human coders iterate on drafts, catching slips before they snowball.

Why does this matter beyond lab coats? For starters, it’s a game-changer for indie devs, startups, or anyone knee-deep in code reviews. Picture auto-fixing that pesky React bug or refactoring legacy Python without the headache. And since it’s open-source under Apache 2.0, the floodgates are open for remixing—folks are already buzzing on Reddit about fine-tunes for local setups. Sure, it’s a research preview of their proprietary KAT-Coder (no chit-chat fluency here, just pure coding grit), but that raw edge makes it a thrilling sandbox for pushing boundaries.

Want to dip your toes in? Here’s a dead-simple guide to fire it up—no PhD required. You’ll need Python with the Hugging Face Transformers library (pip install transformers if you’re starting fresh). Head to the model’s Hugging Face page, download the files, and paste this into a Jupyter notebook or script:

python

from transformers import AutoModelForCausalLM, AutoTokenizer

import torch

# Load the goodies

model_name = "Kwaipilot/KAT-Dev-72B-Exp"

tokenizer = AutoTokenizer.from_pretrained(model_name)

model = AutoModelForCausalLM.from_pretrained(

model_name,

torch_dtype=torch.bfloat16, # Keeps it lightweight

device_map="auto" # Auto-spreads across your GPU/CPU

)

# Craft a prompt—like fixing a buggy function

prompt = "Fix this Python code that crashes on empty lists: def divide(a, b): return a / b"

messages = [{"role": "user", "content": prompt}]

text = tokenizer.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)

inputs = tokenizer([text], return_tensors="pt").to(model.device)

# Generate the fix—tweak max_new_tokens for longer outputs

outputs = model.generate(**inputs, max_new_tokens=512, temperature=0.6)

fixed_code = tokenizer.decode(outputs[0][len(inputs.input_ids[0]):], skip_special_tokens=True)

print("AI's fix:", fixed_code)Run it, and watch the model chew through your prompt. Pro tip: For SWE-style tasks, dial temperature to 0.6 and cap turns at 150 to mimic benchmark vibes. If you’ve got beefy hardware (think A100 GPUs), it’ll hum; otherwise, quantized versions might pop up soon from the community. Just remember, it’s research-grade—test thoroughly before production.

Releases like this get my coder heart racing; it’s a reminder that open innovation can level the playing field, especially when giants like KwaiAI share their playbook. Can’t wait to see what wild apps sprout from it.