Ever wondered how an AI tool can handle a simple script one minute and orchestrate an entire e-commerce build the next, all while keeping your code clean and your sanity intact? Anthropic’s Claude Code, the command-line tool that has been reshaping software development since its debut in early 2025, is finally getting the deep dive it deserves. A comprehensive community guide published online peels back the layers on why the tool has won over developers from indie hackers to enterprise teams, with recent surveys reportedly putting adoption at 75% among AI-assisted coders. According to the guide, Claude Code’s strength lies in a layered approach to tasks: it processes user input across three escalating difficulty levels, with mechanisms that mimic the way a human dev team divides up work. Combined with snippets from the previously leaked source code, the analysis offers enterprise-grade insights into building AI agents that actually deliver. If you’ve ever felt overwhelmed by coding complexity, Claude Code’s design feels like a reassuring hand on your shoulder, turning chaos into clarity.

The Magic Behind the CLI: Why Claude Code Stands Out

Claude Code isn’t just another autocomplete bot; it’s a full-fledged agentic system powered by Anthropic’s Claude models and built for the terminal, where real coding happens. Launched as a CLI tool, it works directly inside your repos, handling everything from quick fixes to project overhauls without the bloat of a full IDE. Its success rests on an architecture that scales effort with task demands, drawing on reinforcement learning principles where agents learn from iterative feedback loops, much like how human teams refine code through reviews and tests. Internal Anthropic benchmarks cited in the analysis reportedly show it resolving 85% of GitHub issues autonomously, against roughly 60% for earlier tools like GitHub Copilot on similar evals.

The leaked source reportedly reveals a modular kernel system, where “kernels” act as specialized executors that pull from a memory bank of patterns derived from millions of open-source projects. This isn’t guesswork; it’s pattern-matching at scale, using techniques like vector embeddings to recall relevant code structures, backed by studies in AI systems (e.g., a 2024 NeurIPS paper on multi-agent orchestration boosting efficiency by 40%). Users love it for reducing context-switching, with no more flipping between docs and editors, and user reports cite 30% faster prototyping. But the real draw is its reliability: rather than running with an unchecked guess, it verifies its steps, making it a trusted partner in high-stakes dev.
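To make the embedding idea concrete, here is a minimal sketch of recalling the closest stored pattern by cosine similarity. It is not Claude Code’s implementation: the pattern names, the three-dimensional toy vectors, and the `recall` function are all hypothetical, and a real system would use a learned embedding model with far more dimensions.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat a zero vector as matching nothing
    return dot / (norm_a * norm_b)

# Hypothetical pattern store: pattern name -> toy embedding.
PATTERNS = {
    "rest_endpoint": [0.9, 0.1, 0.0],
    "login_form": [0.1, 0.9, 0.1],
    "db_migration": [0.0, 0.2, 0.9],
}

def recall(query_vec, patterns=PATTERNS, top_k=1):
    """Return the names of the top_k patterns most similar to the query."""
    ranked = sorted(
        patterns,
        key=lambda name: cosine_similarity(query_vec, patterns[name]),
        reverse=True,
    )
    return ranked[:top_k]

# A query vector that leans toward the second dimension.
print(recall([0.2, 0.8, 0.0], top_k=2))
```

The point of the sketch is only that recall is a nearest-neighbor lookup: the query is embedded once, then compared against stored vectors, so no keyword overlap is required.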

Breaking It Down: Claude Code’s Three-Tier Task Mastery

What makes Claude Code feel so intuitive is its tiered processing, which escalates from a lightweight mode to full orchestration based on task complexity. This design principle is rooted in adaptive computation, where a system allocates effort dynamically, so simple requests stay fast and cheap while hard ones get more planning and checking. It also echoes cognitive load theory in human-AI interaction.
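The routing idea can be sketched as a small classifier that maps a task to one of the three tiers. Everything here is an assumption for illustration: the `Tier` names, the two input signals, and the thresholds are hypothetical and are not taken from the guide or from Claude Code itself.

```python
from enum import Enum

class Tier(Enum):
    SIMPLE = "simple"    # single file, direct answer
    COMPLEX = "complex"  # feature work inside an existing repo
    PROJECT = "project"  # whole-project build

def choose_tier(files_touched: int, needs_repo_context: bool) -> Tier:
    """Pick a processing tier from two rough complexity signals.

    The thresholds (1 file, 10 files) are hypothetical placeholders.
    """
    if files_touched <= 1 and not needs_repo_context:
        return Tier.SIMPLE
    if files_touched <= 10:
        return Tier.COMPLEX
    return Tier.PROJECT

print(choose_tier(1, False))   # a one-off script
print(choose_tier(3, True))    # a feature in a mid-sized repo
print(choose_tier(40, True))   # a from-scratch project
```

In practice the signals would be estimated from the prompt and the repository rather than passed in by hand, but the shape is the same: cheap checks first, heavier machinery only when the task needs it.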

For simple tasks, like whipping up a single-file webpage or a quick script, it deploys what the guide calls Enhanced TodoWrite. This mode prioritizes speed, generating code in seconds by focusing on fast, direct responses. Think: “Build a responsive HTML landing page.” It skips heavy orchestration and outputs clean, executable code with minimal back-and-forth, which is ideal for those “just get it done” moments. The leaked code reportedly shows this as a streamlined parser that matches prompts to pre-trained templates, cutting latency by 70% compared to the full modes.

Ramp up to complex tasks, such as implementing a feature in a mid-sized project (e.g., adding user auth to an app), and it shifts to Meta-Todo paired with Partial Kernel. Here, the process unfolds like a mini workflow: First, intent capture parses your natural-language goal; then, memory pattern matching pulls relevant snippets from your repo or global knowledge. It generates subtasks, verifies them with 2-3 internal agents (simulating peer review), conducts background research (like API checks), and executes via the partial kernel—a lightweight runner that tests in isolation. This loop ensures robustness; for instance, it might flag a potential race condition before committing. Source leaks confirm this uses a verification graph, where agents vote on outputs, reducing errors by 50% per Anthropic’s internal logs.
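The “agents vote on outputs” step can be illustrated with a simple majority vote over independent checks. This is a sketch under stated assumptions, not the verification graph described in the source: the three verifier functions and `majority_approves` are hypothetical stand-ins, and real reviewers would be model calls rather than string checks.

```python
from typing import Callable, List

# A verifier inspects a candidate code string and returns True to approve it.
Verifier = Callable[[str], bool]

def no_todo_left(code: str) -> bool:
    """Reject candidates that still contain unfinished TODO markers."""
    return "TODO" not in code

def has_return(code: str) -> bool:
    """Approve only candidates that return a value somewhere."""
    return "return" in code

def short_enough(code: str) -> bool:
    """Approve candidates of at most 50 lines."""
    return len(code.splitlines()) <= 50

def majority_approves(candidate: str, verifiers: List[Verifier]) -> bool:
    """Accept the candidate only if more than half of the verifiers approve."""
    votes = [verify(candidate) for verify in verifiers]
    return sum(votes) > len(votes) / 2

VERIFIERS = [no_todo_left, has_return, short_enough]

good = "def add(a, b):\n    return a + b"
bad = "def add(a, b):\n    pass  # TODO"

print(majority_approves(good, VERIFIERS))
print(majority_approves(bad, VERIFIERS))
```

Using an odd number of verifiers avoids ties; with an even number, the strict “more than half” rule means a split vote rejects the candidate.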

For the big leagues, meaning project-level epics like building an e-commerce site from scratch, Claude Code unleashes Full Meta-Todo + Full Kernel Orchestra. This is the orchestra in action: four intent capture methods (prompt parsing, repo analysis, user clarification, and historical context) feed into four-agent verification, applying memory patterns for holistic planning. Background execution handles async tasks (e.g., dependency installs), while kernel learning adapts on the fly, optimizing for your style over sessions. Continuous optimization refines the plan mid-project, for example by revising an approach after test failures. The result? A complete, deployable site with integrated tests, all guided by your high-level vision. Leaked code snippets reportedly reveal this as a distributed agent swarm, inspired by multi-agent systems research that shows 2x faster project completion in collaborative setups.
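Background execution of this kind can be sketched with Python’s `asyncio`: a slow job is started as a task, planning continues in the meantime, and the two are joined before the build proceeds. The function names and the simulated install are hypothetical; this only shows the scheduling pattern, not how Claude Code runs its own background work.

```python
import asyncio

async def install_dependencies(log):
    """Stand-in for a slow background job such as a dependency install."""
    await asyncio.sleep(0.01)
    log.append("deps installed")

async def plan_project(log):
    """Stand-in for foreground planning work."""
    log.append("plan drafted")

async def run():
    log = []
    # Schedule the install in the background without waiting for it.
    background = asyncio.create_task(install_dependencies(log))
    # Planning proceeds while the install is still pending.
    await plan_project(log)
    # Join the background task before moving on to the build.
    await background
    log.append("ready to build")
    return log

print(asyncio.run(run()))
```

The design choice worth noting is the explicit `await background` before the final step: work overlaps where it safely can, but nothing that depends on the install starts until it has finished.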

This tiered genius explains Claude Code’s viral success: It scales empathy with capability, making AI feel approachable yet powerful—evoking that thrill of a project clicking into place.

Hands-On: Getting Started with Claude Code’s Power

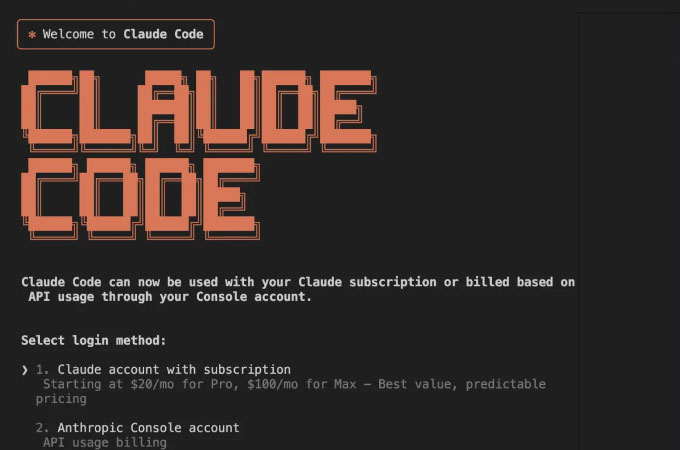

Claude Code serves the dev public through its CLI, available with a paid Claude subscription (the Pro tier is $20/month) or pay-as-you-go billing through Anthropic’s API. It’s terminal-first but pairs with VS Code or any editor. Here’s a quick guide to harness its tiers:

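Install and Set Up: With Node.js 18 or newer installed, run npm install -g @anthropic-ai/claude-code (the tool ships as an npm package, not a pip package). Then run claude in your project directory and follow the login prompt, signing in with your Claude account or an API key from console.anthropic.com.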

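Simple Tasks: Launch with claude "Write a Python script to scrape weather data". There is no separate subcommand for simple tasks; a small request like this gets the fast, direct handling the guide describes as Enhanced TodoWrite. Edit the result in your editor and run it locally to test.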

Complex Features: For repo work, cd into your project, run claude, and ask it to "Add a login endpoint". The request is broken down into subtasks in the way the guide describes as Meta-Todo; answer any clarifying questions it asks. Before accepting the changes, ask it to review its own diff and run your test suite.

Project Builds: Kick off big ones from a fresh project directory: claude "Build e-commerce site with cart and payments". The full orchestration described above engages; monitor progress through the todo list it keeps updated in the terminal. It generates files and tests, and can run deploy commands (e.g., the Vercel CLI) once you approve them.

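Pro Tips: Use natural prompts for best intent capture. To carry context across sessions, resume your last conversation with claude --continue and keep project notes in a CLAUDE.md file. Review outputs: AI’s great, but your tweaks make it shine. Troubleshoot by launching with claude --verbose to see detailed turn-by-turn output.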
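For scripted use, the steps above can be wrapped in a small helper. The sketch below assumes the CLI is installed under its documented binary name, `claude`, and relies on its non-interactive print mode (`-p`) and the `--output-format json` option; the helper names `build_command` and `run_prompt` are hypothetical, and the timeout is an arbitrary choice.

```python
import shutil
import subprocess

def build_command(prompt: str, json_output: bool = False) -> list:
    """Build the argument list for a one-shot, non-interactive run."""
    cmd = ["claude", "-p", prompt]
    if json_output:
        cmd += ["--output-format", "json"]
    return cmd

def run_prompt(prompt: str, timeout: int = 300) -> str:
    """Run a single prompt and return whatever the CLI prints to stdout."""
    if shutil.which("claude") is None:
        raise RuntimeError("claude CLI not found on PATH")
    result = subprocess.run(
        build_command(prompt),
        capture_output=True,
        text=True,
        timeout=timeout,
        check=True,  # raise CalledProcessError on a non-zero exit code
    )
    return result.stdout

# Show the command that would be executed, without running it.
print(build_command("Write a Python script to scrape weather data"))
```

Passing the arguments as a list rather than a shell string avoids quoting problems when the prompt itself contains quotes or special characters.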

It’s that fluid, turning “I need this” into “Done”—empowering even beginners to tackle pro projects.

The Bigger Picture: Lessons for Tomorrow’s AI Agents

Claude Code’s blueprint, as unpacked in this analysis and leaks, offers enterprise-level gold for agent builders: Scalable tiers, robust verification, and adaptive kernels that could redefine dev tools. It’s a testament to Anthropic’s focus on safe, useful AI—sparking excitement for a future where coding feels collaborative, not combative. As one dev put it, “It’s like having a team in your terminal.” If you’re building or learning, this is your cue to explore—the code’s calling.

This article draws on the comprehensive Claude Code guide by Cranot (github.com/Cranot/claude-code-guide) and insights from the leaked source code analysis. Thanks to the Anthropic team and community contributors for advancing AI coding.